Abstract

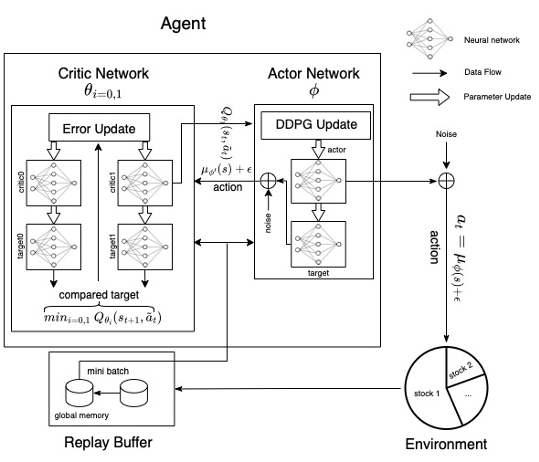

Portfolio Optimization (PO) is a financial engineering technique that rebalances resources across multiple risky assets at a given point in time to maximize returns and minimize risk. This study presents two methods for PO, one static and one dynamic. The static method uses the Maximum Sharpe Ratio to determine allocation weights that optimize profits over past data. The dynamic method employs Deep Reinforcement Learning (DRL) to build a model that analyzes stock movements and recommends immediate trading actions (buy/sell/hold). It uses Twin Delayed Deep Deterministic Policy Gradient (TD3) because TD3 performs well in continuous action spaces without excessive overestimation bias. The purpose of this study is to evaluate the performance of TD3 on relatively small datasets. Experiments evaluated on criteria such as expected return and Sharpe Ratio indicate that the DRL models outperform the baseline models. Hyper-parameter tuning has a beneficial effect overall.